A Scientific History and Philosophical Defense of the Theory of Intelligent Design

| By: Stephen C. Meyer, Senior Fellow, Discovery Institute; ©October 7, 2008 |

| What is this theory of intelligent design, and where did it come from? And why does it arouse such passion and inspire such apparently determined efforts to suppress it? |

Contents

- 1 The Current Landscape

- 2 A Brief History of the Design Argument

- 3 Darwin and the Eclipse of Design

- 4 Problems with the Neo-Darwinian Synthesis

- 5 The Mystery of Life’s Origin

- 6 Of Clues and Causes

- 7 Inference to the Best Explanation

- 8 Causes Now in Operation

- 9 And Then There Was One

- 10 DNA by Design: Developing the Argument from Information

- 11 What is Information?

- 12 Darwin on Trial and Philip Johnson

- 13 Darwin’s Black Box and Michael Behe

- 14 An Institutional Home

- 15 William Dembski and The Design Inference

- 16 Design Beyond Biology

- 17 Three Philosophical Objections

- 18 An Argument from Knowledge

- 19 Not Analogy but Identity

- 20 But Is It Science?

- 21 Conclusion

- 22 Notes

- 23 References

The Current Landscape

In December of 2004, the renowned British philosopher Antony Flew made worldwide news when he repudiated a lifelong commitment to atheism, citing, among other factors, evidence of intelligent design in the DNA molecule. In that same month, the American Civil Liberties Union filed suit to prevent a Dover, Pennsylvania school district from informing its students that they could learn about the theory of intelligent design from a supplementary science textbook in their school library. The following February, The Wall Street Journal (Klinghoffer 2005) reported that an evolutionary biologist at the Smithsonian Institution with two doctorates had been punished for publishing a peer-reviewed scientific article making a case for intelligent design.

Since 2005, the theory of intelligent design has been the focus of a frenzy of international media coverage, with prominent stories appearing in The New York Times, Nature, The London Times, The Independent (London), Sekai Nippo (Tokyo), The Times of India, Der Spiegel, The Jerusalem Post and Time magazine, to name just a few. And recently, a major conference about intelligent design was held in Prague (attended by some 700 scientists, students and scholars from Europe, Africa and the United States), further signaling that the theory of intelligent design has generated worldwide interest.

But what is this theory of intelligent design, and where did it come from? And why does it arouse such passion and inspire such apparently determined efforts to suppress it?

According to a spate of recent media reports, intelligent design is a new “faith-based” alternative to evolution – one based on religion rather than scientific evidence. As the story goes, intelligent design is just biblical creationism repackaged by religious fundamentalists in order to circumvent a 1987 United States Supreme Court prohibition against teaching creationism in the U.S. public schools. Over the past two years, major newspapers, magazines and broadcast outlets in the United States and around the world have repeated this trope.

But is it accurate? As one of the architects of the theory of intelligent design and the director of a research center that supports the work of scientists developing the theory, I know that it isn’t.

The modern theory of intelligent design was not developed in response to a legal setback for creationists in 1987. Instead, it was first proposed in the late 1970s and early 1980s by a group of scientists – Charles Thaxton, Walter Bradley and Roger Olsen – who were trying to account for an enduring mystery of modern biology: the origin of the digital information encoded along the spine of the DNA molecule. Thaxton and his colleagues came to the conclusion that the information-bearing properties of DNA provided strong evidence of a prior but unspecified designing intelligence. They wrote a book proposing this idea in 1984, three years before the U.S. Supreme Court decision (in Edwards v. Aguillard) that outlawed the teaching of creationism.

Earlier in the 1960s and 1970s, physicists had already begun to reconsider the design hypothesis. Many were impressed by the discovery that the laws and constants of physics are improbably “finely-tuned” to make life possible. As British astrophysicist Fred Hoyle put it, the fine-tuning of the laws and constants of physics suggested that a designing intelligence “had monkeyed with physics” for our benefit.

Contemporary scientific interest in the design hypothesis not only predates the U.S. Supreme Court ruling against creationism; the formal theory of intelligent design also clearly differs from creationism in both its method and content. The theory of intelligent design, unlike creationism, is not based upon the Bible. Instead, it is based on observations of nature, which the theory attempts to explain based on what we know about the cause-and-effect structure of the world and the patterns that generally indicate intelligent causes. Intelligent design is an inference from empirical evidence, not a deduction from religious authority.

The propositional content of the theory of intelligent design also differs from that of creationism. Creationism or Creation Science, as defined by the U.S. Supreme Court, defends a particular reading of the book of Genesis in the Bible, typically one that asserts that the God of the Bible created the earth in six literal twenty-four hour periods a few thousand years ago. The theory of intelligent design does not offer an interpretation of the book of Genesis, nor does it posit a theory about the length of the Biblical days of creation or even the age of the earth. Instead, it posits a causal explanation for the observed complexity of life.

But if the theory of intelligent design is not creationism, what is it? Intelligent design is an evidence-based scientific theory about life’s origins that challenges strictly materialistic views of evolution. According to Darwinian biologists such as Oxford’s Richard Dawkins (1986: 1), living systems “give the appearance of having been designed for a purpose.” But, for modern Darwinists, that appearance of design is entirely illusory. Why? According to neo-Darwinism, wholly undirected processes such as natural selection and random mutations are fully capable of producing the intricate design-like structures in living systems. In their view, natural selection can mimic the powers of a designing intelligence without itself being directed by an intelligence of any kind.

In contrast, the theory of intelligent design holds that there are tell-tale features of living systems and the universe – for example, the information-bearing properties of DNA, the miniature circuits and machines in cells and the fine tuning of the laws and constants of physics – that are best explained by an intelligent cause rather than an undirected material process. The theory does not challenge the idea of “evolution” defined as either change over time or common ancestry, but it does dispute Darwin’s idea that the cause of biological change is wholly blind and undirected. Either life arose as the result of purely undirected material processes or a guiding intelligence played a role. Design theorists affirm the latter option and argue that living organisms look designed because they really were designed.

A Brief History of the Design Argument

By making a case for design based on observations of natural phenomena, advocates of the contemporary theory of intelligent design have resuscitated the classical design argument. Prior to the publication of The Origin of Species by Charles Darwin in 1859, many Western thinkers, for over two thousand years, had answered the question “how did life arise?” by invoking the activity of a purposeful designer. Design arguments based on observations of the natural world were made by Greek and Roman philosophers such as Plato (1960: 279) and Cicero (1933: 217), by Jewish philosophers such as Maimonides and by Christian thinkers such as Thomas Aquinas[1] (see Hick 1970: 1).

The idea of design also figured centrally in the modern scientific revolution (1500-1700). As historians of science (see Gillespie 1987: 1-49) have often pointed out, many of the founders of early modern science assumed that the natural world was intelligible precisely because they also assumed that it had been designed by a rational mind. In addition, many individual scientists – Johannes Kepler in astronomy (see Kepler 1981: 93-103; Kepler 1995: 170, 240),[2] John Ray in biology (see Ray 1701) and Robert Boyle in chemistry (see Boyle 1979: 172) – made specific design arguments based upon empirical discoveries in their respective fields. This tradition attained an almost majestic rhetorical quality in the writing of Sir Isaac Newton, who made both elegant and sophisticated design arguments based upon biological, physical and astronomical discoveries. Writing in the General Scholium to the Principia, Newton (1934: 543-44) suggested that the stability of the planetary system depended not only upon the regular action of universal gravitation, but also upon the very precise initial positioning of the planets and comets in relation to the sun. As he explained:

- [T]hough these bodies may, indeed, continue in their orbits by the mere laws of gravity, yet they could by no means have at first derived the regular position of the orbits themselves from those laws […] [Thus] [t]his most beautiful system of the sun, planets and comets, could only proceed from the counsel and dominion of an intelligent and powerful Being.

Or as he wrote in the Opticks:

- How came the Bodies of Animals to be contrived with so much Art, and for what ends were their several parts? Was the Eye contrived without Skill in Opticks, and the Ear without Knowledge of Sounds? […] And these things being rightly dispatch’d, does it not appear from Phænomena that there is a Being incorporeal, living, intelligent, omnipresent […]. (Newton 1952: 369-70.)

Scientists continued to make such design arguments well into the early nineteenth century, especially in biology. By the later part of the 18th century, however, some enlightenment philosophers began to express skepticism about the design argument. In particular, David Hume, in his Dialogues Concerning Natural Religion (1779), argued that the design argument depended upon a flawed analogy with human artifacts. He admitted that artifacts derive from intelligent artificers, and that biological organisms have certain similarities to complex human artifacts. Eyes and pocket watches both depend upon the functional integration of many separate and specifically configured parts. Nevertheless, he argued, biological organisms also differ from human artifacts – they reproduce themselves, for example – and the advocates of the design argument fail to take these dissimilarities into account. Since experience teaches that organisms always come from other organisms, Hume argued that analogical argument really ought to suggest that organisms ultimately come from some primeval organism (perhaps a giant spider or vegetable), not a transcendent mind or spirit.

Despite this and other objections, Hume’s categorical rejection of the design argument did not prove entirely decisive with either theistic or secular philosophers. Thinkers as diverse as the Scottish Presbyterian Thomas Reid (1981: 59), the Enlightenment deist Thomas Paine (1925: 6) and the rationalist philosopher Immanuel Kant continued to affirm[3] various versions of the design argument after the publication of Hume’s Dialogues. Moreover, with the publication of William Paley’s Natural Theology, science-based design arguments would achieve new popularity, both in Britain and on the continent. Paley (1852: 8-9) catalogued a host of biological systems that suggested the work of a superintending intelligence. Paley argued that the astonishing complexity and superb adaptation of means to ends in such systems could not originate strictly through the blind forces of nature, any more than could a complex machine such as a pocket watch. Paley also responded directly to Hume’s claim that the design inference rested upon a faulty analogy. A watch that could reproduce itself, he argued, would constitute an even more marvelous effect than one that could not. Thus, for Paley, the differences between artifacts and organisms only seemed to strengthen the conclusion of design. And indeed, despite the widespread currency of Hume’s objections, many scientists continued to find Paley’s watch-to-watchmaker reasoning compelling well into the 19th century.

Darwin and the Eclipse of Design

Acceptance of the design argument began to abate during the late 19th century with the emergence of increasingly powerful materialistic explanations of apparent design in biology, particularly Charles Darwin’s theory of evolution by natural selection. Darwin argued in 1859 that living organisms only appeared to be designed. To make this case, he proposed a concrete mechanism, natural selection acting on random variations, that could explain the adaptation of organisms to their environment (and other evidences of apparent design) without actually invoking an intelligent or directing agency. Darwin saw that natural forces would accomplish the work of a human breeder and thus that blind nature could come to mimic, over time, the action of a selecting intelligence – a designer. If the origin of biological organisms could be explained naturalistically,[4] as Darwin (1964: 481-82) argued, then explanations invoking an intelligent designer were unnecessary and even vacuous.

Thus, it was not ultimately the arguments of the philosophers that destroyed the popularity of the design argument, but a scientific theory of biological origins. This trend was reinforced by the emergence of other fully naturalistic origins scenarios in astronomy, cosmology and geology. It was also reinforced (and enabled) by an emerging positivistic tradition in science that increasingly sought to exclude appeals to supernatural or intelligent causes from science by definition (see Gillespie 1979: 41-66, 82-108 for a discussion of this methodological shift). Natural theologians such as Robert Chambers, Richard Owen and Asa Gray, writing just prior to Darwin, tended to oblige this convention by locating design in the workings of natural law rather than in the complex structure or function of particular objects. While this move certainly made the natural theological tradition more acceptable to shifting methodological canons in science, it also gradually emptied it of any distinctive empirical content, leaving it vulnerable to charges of subjectivism and vacuousness. By locating design more in natural law and less in complex contrivances that could be understood by direct comparison to human creativity, later British natural theologians ultimately made their research program indistinguishable from the positivistic and fully naturalistic science of the Darwinians (Dembski 1996). As a result, the notion of design, to the extent it maintained any intellectual currency, soon became relegated to a matter of subjective belief. One could still believe that a mind superintended over the regular law-like workings of nature, but one might just as well assert that nature and its laws existed on their own. Thus, by the end of the nineteenth century, natural theologians could no longer point to any specific artifact of nature that required intelligence as a necessary explanation. As a result, intelligent design became undetectable except “through the eyes of faith.”

Though the design argument in biology went into retreat after the publication of The Origin, it never quite disappeared. Darwin was challenged by several leading scientists of his day, most forcefully by the great Harvard naturalist Louis Agassiz, who argued that the sudden appearance of the first complex animal forms in the Cambrian fossil record pointed to “an intellectual power” and attested to “acts of mind.” Similarly, the co-founder of the theory of evolution by natural selection, Alfred Russel Wallace (1991: 33-34), argued that some things in biology were better explained by reference to the work of a “Higher intelligence” than by reference to Darwinian evolution. There seemed to him “to be evidence of a Power” guiding the laws of organic development “in definite directions and for special ends.” As he put it, “[S]o far from this view being out of harmony with the teachings of science, it has a striking analogy with what is now taking place in the world.” And in 1897, Oxford scholar F.C.S. Schiller argued that “it will not be possible to rule out the supposition that the process of Evolution may be guided by an intelligent design” (Schiller 1903: 141).

This continued interest in the design hypothesis was made possible in part because the mechanism of natural selection had a mixed reception in the immediate post-Darwinian period. As the historian of biology Peter Bowler (1986: 44-50) has noted, classical Darwinism entered a period of eclipse during the late 19th and early 20th centuries mainly because Darwin lacked an adequate theory for the origin and transmission of new heritable variation. Natural selection, as Darwin well understood, could accomplish nothing without a steady supply of genetic variation, the ultimate source of new biological structure. Nevertheless, both the blending theory of inheritance that Darwin had assumed and the classical Mendelian genetics that soon replaced it, implied limitations on the amount of genetic variability available to natural selection. This in turn implied limits on the amount of novel structure that natural selection could produce.

By the late 1930s and 1940s, however, natural selection was revived as the main engine of evolutionary change as developments in a number of fields helped to clarify the nature of genetic variation. The resuscitation of the variation / natural selection mechanism by modern genetics and population genetics became known as the neo-Darwinian synthesis. According to the new synthetic theory of evolution, the mechanism of natural selection acting upon random variations (especially including small-scale mutations) sufficed to account for the origin of novel biological forms and structures. Small-scale “microevolutionary” changes could be extrapolated indefinitely to account for large-scale “macroevolutionary” development. With the revival of natural selection, the neo-Darwinists would assert, like Darwinists before them, that they had found a “designer substitute” that could explain the appearance of design in biology as the result of an entirely undirected natural process.[5] As Harvard evolutionary biologist Ernst Mayr (1982: xi-xii) has explained, “[T]he real core of Darwinism […] is the theory of natural selection. This theory is so important for the Darwinian because it permits the explanation of adaptation, the ‘design’ of the natural theologian, by natural means.” By the centennial celebration of Darwin’s Origin of Species in 1959, it was assumed by many scientists that natural selection could fully explain the appearance of design and that, consequently, the design argument in biology was dead.

Problems with the Neo-Darwinian Synthesis

Since the late 1960s, however, the modern synthesis that emerged during the 1930s, 1940s and 1950s has begun to unravel in the face of new developments in paleontology, systematics, molecular biology, genetics and developmental biology. Since then a series of technical articles and books – including such recent titles as Evolution: a Theory in Crisis (1986) by Michael Denton, Darwinism: The Refutation of a Myth (1987) by Soren Lovtrup, The Origins of Order (1993) by Stuart A. Kauffman, How The Leopard Changed Its Spots (1994) by Brian C. Goodwin, Reinventing Darwin (1995) by Niles Eldredge, The Shape of Life (1996) by Rudolf A. Raff, Darwin’s Black Box (1996) by Michael Behe, The Origin of Animal Body Plans (1997) by Wallace Arthur, Sudden Origins: Fossils, Genes, and the Emergence of Species (1999) by Jeffrey H. Schwartz – have cast doubt on the creative power of neo-Darwinism’s mutation/selection mechanism. As a result, a search for alternative naturalistic mechanisms of innovation has ensued with, as yet, no apparent success or consensus. So common are doubts about the creative capacity of the selection / mutation mechanism, neo-Darwinism’s “designer substitute,” that prominent spokesmen for evolutionary theory must now periodically assure the public that “just because we don’t know how evolution occurred, does not justify doubt about whether it occurred.”[6] As Niles Eldredge (1982: 508-9) wrote, “Most observers see the current situation in evolutionary theory – where the object is to explain how, not if, life evolves – as bordering on total chaos.” Or as Stephen Gould (1980: 119-20) wrote, “The neo-Darwinism synthesis is effectively dead, despite its continued presence as textbook orthodoxy.” (See also Müller and Newman 2003: 3-12.)

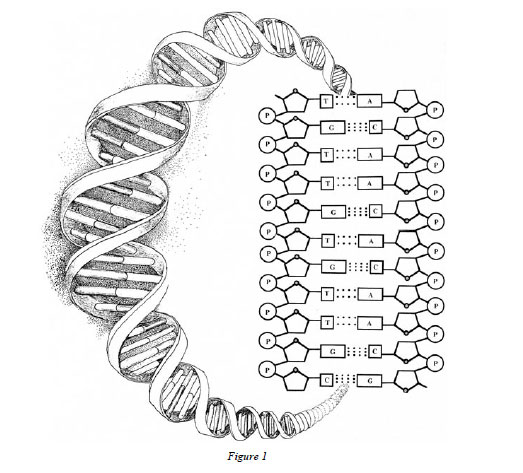

Soon after Gould and Eldredge acknowledged these difficulties, the first important books (Thaxton, et al. 1984; Denton 1985) advocating the idea of intelligent design as an alternative to neo-Darwinism began to appear in the United States and Britain.[7] But the scientific antecedents of the modern theory of intelligent design can be traced back to the beginning of the molecular biological revolution. In 1953 when Watson and Crick elucidated the structure of the DNA molecule, they made a startling discovery. The structure of DNA allows it to store information in the form of a four-character digital code. (See Figure 1). Strings of precisely sequenced chemicals called nucleotide bases store and transmit the assembly instructions – the information – for building the crucial protein molecules and machines the cell needs to survive.

Francis Crick later developed this idea with his famous “sequence hypothesis,” according to which the chemical constituents in DNA function like letters in a written language or symbols in a computer code. Just as English letters may convey a particular message depending on their arrangement, so too do certain sequences of chemical bases along the spine of a DNA molecule convey precise instructions for building proteins. The arrangement of the chemical characters determines the function of the sequence as a whole. Thus, the DNA molecule has the same property of “sequence specificity” or “specified complexity” that characterizes codes and language. As Richard Dawkins has acknowledged, “the machine code of the genes is uncannily computer-like” (Dawkins 1995: 11). As Bill Gates has noted, “DNA is like a computer program but far, far more advanced than any software ever created” (Gates 1995: 188). After the early 1960s, further discoveries made clear that the digital information in DNA and RNA is only part of a complex information processing system – an advanced form of nanotechnology that both mirrors and exceeds our own in its complexity, design logic and information storage density.

Thus, even as the design argument was being declared dead at the Darwinian centennial at the close of the 1950s, evidence that many scientists would later see as pointing to design was being uncovered in the nascent discipline of molecular biology. In any case, discoveries in this field would soon generate a growing rumble of voices dissenting from neo-Darwinism. In By Design, a history of the current design controversy, journalist Larry Witham (2003) traces the immediate roots of the theory of intelligent design in biology to the 1960s, at which time developments in molecular biology were generating new problems for the neo-Darwinian synthesis. At this time, mathematicians, engineers and physicists were beginning to express doubts that random mutations could generate the genetic information needed to produce crucial evolutionary transitions in the time available to the evolutionary process. Among the most prominent of these skeptical scientists were several from the Massachusetts Institute of Technology.

These researchers might have gone on talking among themselves about their doubts but for an informal gathering of mathematicians and biologists in Geneva in the mid-1960s at the home of MIT physicist Victor Weisskopf. During a picnic lunch the discussion turned to evolution, and the mathematicians expressed surprise at the biologists’ confidence in the power of mutations to assemble the genetic information necessary to evolutionary innovation. Nothing was resolved during the argument that ensued, but those present found the discussion stimulating enough that they set about organizing a conference to probe the issue further. This gathering occurred at the Wistar Institute in Philadelphia in the spring of 1966 and was chaired by Sir Peter Medawar, Nobel Laureate and director of North London’s Medical Research Council’s laboratories. In his opening remarks at the meeting, he said that the “immediate cause of this conference is a pretty widespread sense of dissatisfaction about what has come to be thought of as the accepted evolutionary theory in the English-speaking world, the so-called neo-Darwinian theory” (Taylor 1983: 4).

The mathematicians were now in the spotlight and they took the opportunity to argue that neo-Darwinism faced a formidable combinatorial problem (see Moorhead and Kaplan 1967 for the seminar proceedings).[8] In their view, the ratio of the number of functional genes and proteins, on the one hand, to the enormous number of possible sequences corresponding to a gene or protein of a given length, on the other, seemed so small as to preclude the origin of genetic information by a random mutational search. A protein one hundred amino acids in length represents an extremely unlikely occurrence. There are roughly 10^130 possible amino acid sequences of this length, if one considers only the 20 protein-forming acids as possibilities. The vast majority of these sequences – it was (correctly) assumed – perform no biological function (see Axe 2004: 1295-1314 for a rigorous experimental evaluation of the rarity of functional proteins within the “sequence space” of possible combinations). Would an undirected search through this enormous space of possible sequences have a realistic chance of finding a functional sequence in the time allotted for crucial evolutionary transitions? To many of the Wistar mathematicians and physicists, the answer seemed clearly ‘no.’ Distinguished French mathematician M. P. Schützenberger (1967: 73-5) noted that in human codes, randomness is never the friend of function, much less of progress. When we make changes randomly to computer programs, “we find that we have no chance (i.e. less than 1/10^1000) even to see what the modified program would compute: it just jams.” MIT’s Murray Eden illustrated with reference to an imaginary library evolving by random changes to a single phrase: “Begin with a meaningful phrase, retype it with a few mistakes, make it longer by adding letters, and rearrange subsequences in the string of letters; then examine the result to see if the new phrase is meaningful. Repeat until the library is complete” (Eden 1967: 110).

Would such an exercise have a realistic chance of succeeding, even granting it billions of years? At Wistar, the mathematicians, physicists and engineers argued that it would not. And they insisted that a similar problem confronts any mechanism that relies on random mutations to search large combinatorial spaces for sequences capable of performing novel function – even if, as is the case in biology, some mechanism of selection can act after the fact to preserve functional sequences once they have arisen.
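The size of the sequence space cited in the Wistar discussion can be checked with a short calculation: 20 possible amino acids at each of 100 positions gives 20^100 sequences, which is roughly 1.27 × 10^130. The sketch below only counts sequences; it says nothing about how many of them are functional, and the function names are illustrative choices, not anything taken from the Wistar proceedings.

```python
# Count the possible amino acid sequences of a given length.
# Assumption: every position can hold any of the 20 protein-forming
# amino acids, independently of the other positions.

AMINO_ACIDS = 20      # size of the alphabet
PROTEIN_LENGTH = 100  # residues in the example protein


def sequence_space_size(alphabet_size: int, length: int) -> int:
    """Number of distinct sequences of `length` symbols."""
    return alphabet_size ** length


def order_of_magnitude(n: int) -> int:
    """Exponent k such that 10**k <= n < 10**(k + 1), for n >= 1."""
    return len(str(n)) - 1


total = sequence_space_size(AMINO_ACIDS, PROTEIN_LENGTH)
print(f"20^100 is on the order of 10^{order_of_magnitude(total)}")
```

Python integers have arbitrary precision, so the count is exact and no floating-point rounding enters the comparison.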

Just as the mathematicians at Wistar were casting doubt on the idea that chance (i.e., random mutations) could generate genetic information, another leading scientist was raising questions about the role of law-like necessity. In 1967 and 1968, the Hungarian chemist and philosopher of science Michael Polanyi published two articles suggesting that the information in DNA was “irreducible” to the laws of physics and chemistry (Polanyi 1967: 21; Polanyi 1968: 1308-12). In these papers, Polanyi noted that DNA conveys information in virtue of very specific arrangements of the nucleotide bases (that is, the chemicals that function as alphabetic or digital characters) in the genetic text. Yet, Polanyi also noted, the laws of physics and chemistry allow for a vast number of other possible arrangements of these same chemical constituents. Since chemical laws allow a vast number of possible arrangements of nucleotide bases, Polanyi reasoned that no specific arrangement was dictated or determined by those laws. Indeed, the chemical properties of the nucleotide bases allow them to attach themselves interchangeably at any site on the (sugar-phosphate) backbone of the DNA molecule. (See Figure 1). Thus, as Polanyi (1968: 1309) noted, “As the arrangement of a printed page is extraneous to the chemistry of the printed page, so is the base sequence in a DNA molecule extraneous to the chemical forces at work in the DNA molecule.” Polanyi argued that it is precisely this chemical indeterminacy that allows DNA to store information and which also shows the irreducibility of that information to physical-chemical laws or forces. As he explained:

- Suppose that the actual structure of a DNA molecule were due to the fact that the bindings of its bases were much stronger than the bindings would be for any other distribution of bases, then such a DNA molecule would have no information content. Its code-like character would be effaced by an overwhelming redundancy. […] Whatever may be the origin of a DNA configuration, it can function as a code only if its order is not due to the forces of potential energy. It must be as physically indeterminate as the sequence of words is on a printed page (Polanyi 1968: 1309).
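One way to make the point about redundancy concrete is with Shannon's measure of information. This is offered as an illustration, not as Polanyi's own formulation. If chemistry forced one particular base at a site, the probability of that base would be 1 and the site could carry 0 bits; if all four bases are equally available, the site can carry up to 2 bits. The two probability distributions below are hypothetical inputs chosen for the example, not measured values.

```python
import math

# Shannon entropy in bits for one site, given the probability of each base.
# Assumption: this capacity measure is used only to illustrate the quoted
# point about redundancy; the distributions are hypothetical.


def entropy_bits(probabilities):
    """Sum of p * log2(1/p) over outcomes with nonzero probability."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


# Chemically indeterminate site: A, C, G and T equally available.
indeterminate_site = [0.25, 0.25, 0.25, 0.25]

# Site where bonding forces would dictate a single base.
determined_site = [1.0, 0.0, 0.0, 0.0]

print("indeterminate site:", entropy_bits(indeterminate_site), "bits")
print("determined site:", entropy_bits(determined_site), "bits")
```

Shannon entropy measures only the capacity to carry information, not whether a given sequence performs a function, so the sketch bears on the "no information content" remark and nothing beyond it.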

The Mystery of Life’s Origin

As more scientists began to express doubts about the ability of undirected processes to produce the genetic information necessary to living systems, some began to consider an alternative approach to the problem of the origin of biological form and information. In 1984, after seven years of writing and research, chemist Charles Thaxton, polymer scientist Walter Bradley and geochemist Roger Olsen published a book proposing “an intelligent cause” as an explanation for the origin of biological information. The book was titled The Mystery of Life’s Origin and was published by The Philosophical Library, then a prestigious New York scientific publisher that had previously published more than twenty Nobel laureates.

Thaxton, Bradley and Olsen’s work directly challenged reigning chemical evolutionary explanations of the origin of life, and old scientific paradigms do not, to borrow from a Dylan Thomas poem, “go gently into that good night.” Aware of the potential opposition to their ideas, Thaxton flew to California to meet with one of the world’s top chemical evolutionary theorists, San Francisco State University biophysicist Dean Kenyon, co-author of a leading monograph on the subject, Biochemical Predestination. Thaxton wanted to talk with Kenyon to ensure that Mystery’s critiques of leading origin-of-life theories (including Kenyon’s) were fair and accurate. But Thaxton also had a second and more audacious motive: he planned to ask Kenyon to write the foreword to the book, even though Mystery critiqued the very origin-of-life theory that had made Kenyon famous in his field.

One can imagine how such a meeting might have unfolded, with Thaxton’s bold plan quietly dying in a corner of Kenyon’s office as the two men came to loggerheads over their competing theories. But fortunately for Thaxton, things went better than expected. Before he had worked his way around to making his request, Kenyon volunteered for the job, explaining that he had been moving toward Thaxton’s position for some time (Charles Thaxton, interview by Jonathan Witt, August 16, 2005; Jon Buell, interview by Jonathan Witt, September 21, 2005).

Kenyon’s bestselling origin-of-life text, Biochemical Predestination, had outlined what was then arguably the most plausible evolutionary account of how a living cell might have organized itself from chemicals in the “primordial soup.” Already by the 1970s, however, Kenyon was questioning his own hypothesis. Experiments (some performed by Kenyon himself) increasingly suggested that simple chemicals do not arrange themselves into complex information-bearing molecules such as proteins and DNA without guidance from human investigators. Thaxton, Bradley and Olsen appealed to this fact in constructing their argument, and Kenyon found their case both well-reasoned and well-researched. In the foreword he went on to pen, he described The Mystery of Life’s Origin as “an extraordinary new analysis of an age-old question” (Kenyon 1984: v).

The book eventually became the best-selling advanced college-level work on chemical evolution, with sales fueled by endorsements from leading scientists such as Kenyon, Robert Shapiro and Robert Jastrow and by favorable reviews in prestigious journals such as the Yale Journal of Biology and Medicine.[9] Others dismissed the work as going beyond science.

What was their idea, and why did it generate interest among leading scientists? First, Mystery critiqued all of the current, purely materialistic explanations for the origin of life. In the process, they showed that the famous Miller-Urey experiment did not simulate early Earth conditions, that the existence of an early Earth pre-biotic soup was a myth, that important chemical evolutionary transitions were subject to destructive interfering cross-reactions, and that neither chance nor energy-flow could account for the information in biopolymers such as proteins and DNA. But it was in the book’s epilogue that the three scientists proposed a radically new hypothesis. There they suggested that the information-bearing properties of DNA might point to an intelligent cause. Drawing on the work of Polanyi and others, they argued that chemistry and physics alone couldn’t produce information any more than ink and paper could produce the information in a book. Instead, they argued that our uniform experience suggests that information is the product of an intelligent cause:

- We have observational evidence in the present that intelligent investigators can (and do) build contrivances to channel energy down nonrandom chemical pathways to bring about some complex chemical synthesis, even gene building. May not the principle of uniformity then be used in a broader frame of consideration to suggest that DNA had an intelligent cause at the beginning? (Thaxton et al. 1984: 211.)

Mystery also made the radical claim that intelligent causes could be legitimately considered as scientific hypotheses within the historical sciences, a mode of inquiry they called origins science.

Their book marked the beginning of interest in the theory of intelligent design in the United States, inspiring a generation of younger scholars (see Denton 1985; Denton 1986; Kenyon and Mills 1996: 9-16; Behe 2004: 352-370; Dembski 2002; Dembski 2004: 311-330; Morris 2000: 1-11; Morris 2003a: 13-32; Morris 2003b: 505-515; Lönnig 2001; Lönnig and Saedler 2002: 389-410; Nelson and Wells 2003: 303-322; Meyer 2003a: 223-285; Meyer 2003b: 371-391; Bradley 2004: 331-351) to investigate the question of whether there is actual design in living organisms rather than, as neo-Darwinian biologists and chemical evolutionary theorists had long claimed, the mere appearance of design. At the time the book appeared, I was working as a geophysicist for the Atlantic Richfield Company in Dallas where Charles Thaxton happened to live. I later met him at a scientific conference and became intrigued with the radical idea he was developing about DNA. I began dropping by his office after work to discuss the arguments made in his book. Intrigued, but not yet fully convinced, the next year I left my job as a geophysicist to pursue a Ph.D. at The University of Cambridge in the history and philosophy of science. During my Ph.D. research, I investigated several questions that had emerged in my discussions with Thaxton. What methods do scientists use to study biological origins? Is there a distinctive method of historical scientific inquiry? After completing my Ph.D., I would take up another question: Could the argument from DNA to design be formulated as a rigorous historical scientific argument?

Of Clues and Causes

During my Ph.D. research at Cambridge, I found that historical sciences (such as geology, paleontology and archeology) do employ a distinctive method of inquiry. Whereas many scientific fields involve an attempt to discover universal laws, historical scientists attempt to infer past causes from present effects. As Stephen Gould (1986: 61) put it, historical scientists are trying to “infer history from its results.” Visit the Royal Tyrrell Museum in Alberta, Canada and you will find there a beautiful reconstruction of the Cambrian seafloor with its stunning assemblage of phyla. Or read the fourth chapter of Simon Conway Morris’s book on the Burgess Shale and you will be taken on a vivid guided tour of that long-ago place. But what Morris (1998: 63-115) and the museum scientists did in both cases was to imaginatively reconstruct the ancient Cambrian site from an assemblage of present-day fossils. In other words, paleontologists infer a past situation or cause from present clues.

A key figure in elucidating the special nature of this mode of reasoning was a contemporary of Darwin, polymath William Whewell, master of Trinity College, Cambridge and best known for two books about the nature of science, History of the Inductive Sciences (1837) and The Philosophy of the Inductive Sciences (1840). Whewell distinguished inductive sciences like mechanics (physics) from what he called palaetiology – historical sciences that are defined by three distinguishing features. First, the palaetiological or historical sciences have a distinctive object: to determine “ancient condition[s]” (Whewell 1857, vol. 3: 397) or past causal events. Second, palaetiological sciences explain present events (“manifest effects”) by reference to past (causal) events rather than by reference to general laws (though laws sometimes play a subsidiary role). And third, in identifying a “more ancient condition,” Whewell believed palaetiology utilized a distinctive mode of reasoning in which past conditions were inferred from “manifest effects” using generalizations linking present clues with past causes (Whewell 1840, vol. 2: 121-22, 101-103).

Inference to the Best Explanation

This type of inference is called abductive reasoning. It was first described by the American philosopher and logician C.S. Peirce. He noted that, unlike inductive reasoning, in which a universal law or principle is established from repeated observations of the same phenomena, and unlike deductive reasoning, in which a particular fact is deduced by applying a general law or rule to another particular fact or case, abductive reasoning infers unseen facts, events or causes in the past from clues or facts in the present.

As Peirce himself showed, however, there is a problem with abductive reasoning. Consider the following syllogism:

- If it rains, the streets will get wet.

- The streets are wet.

- Therefore, it rained.

This syllogism infers a past condition (i.e., that it rained) but it commits a logical fallacy known as affirming the consequent. Given that the street is wet (and without additional evidence to decide the matter), one can only conclude that perhaps it rained. Why? Because there are many other possible ways by which the street may have gotten wet. Rain may have caused the streets to get wet; a street cleaning machine might have caused them to get wet; or an uncapped fire hydrant might have done so. It can be difficult to infer the past from the present because there are many possible causes of a given effect.
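The invalidity can be made explicit with a small formalization, offered here only as an illustrative sketch. The symbols R (“it rained”) and W (“the streets are wet”) are labels introduced for this purpose and do not come from Peirce.

```latex
\[
\begin{array}{ll}
\text{Premise 1:}  & R \rightarrow W \\
\text{Premise 2:}  & W \\
\text{Conclusion:} & R \quad \text{(does not follow)}
\end{array}
\]
% Counterexample: R is false and W is true
% (for instance, an uncapped fire hydrant wet the streets).
\[
R = \text{false},\; W = \text{true}
\;\Longrightarrow\;
(R \rightarrow W) = \text{true},\quad W = \text{true},\quad R = \text{false}.
\]
```

Because both premises can be true while the conclusion is false, the wet streets alone license only the weaker claim that rain is one possible cause.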

Peirce’s question was this: how is it that, despite the logical problem of affirming the consequent, we nevertheless frequently make reliable abductive inferences about the past? He noted, for example, that no one doubts the existence of Napoleon. Yet we use abductive reasoning to infer Napoleon’s existence. That is, we must infer his past existence from present effects. But despite our dependence on abductive reasoning to make this inference, no sane or educated person would doubt that Napoleon Bonaparte actually lived. How could this be if the problem of affirming the consequent bedevils our attempts to reason abductively? Peirce’s answer was revealing: “Though we have not seen the man [Napoleon], yet we cannot explain what we have seen without” the hypothesis of his existence (Peirce 1932, vol. 2: 375). Peirce’s words imply that a particular abductive hypothesis can be strengthened if it can be shown to explain a result in a way that other hypotheses do not, and that it can be reasonably believed (in practice) if it explains in a way that no other hypotheses do. In other words, an abductive inference can be enhanced if it can be shown that it represents the best or the only adequate explanation of the “manifest effects” (to use Whewell’s term).

As Peirce pointed out, the problem with abductive reasoning is that there is often more than one cause that can explain the same effect. To address this problem, pioneering geologist Thomas Chamberlain (1965: 754-59) delineated a method of reasoning that he called “the method of multiple working hypotheses.” Geologists and other historical scientists use this method when there is more than one possible cause or hypothesis to explain the same evidence. In such cases, historical scientists carefully weigh the evidence and what they know about various possible causes to determine which best explains the clues before them. Contemporary philosophers of science have called this the method of inference to the best explanation. That is, when trying to explain the origin of an event or structure in the past, historical scientists compare various hypotheses to see which would, if true, best explain it. They then provisionally affirm the hypothesis that best explains the data as the most likely to be true.

Causes Now in Operation

But what constitutes the best explanation for the historical scientist? My research showed that among historical scientists it’s generally agreed that best doesn’t mean ideologically satisfying or mainstream; instead, best generally has been taken to mean, first and foremost, most causally adequate. In other words, historical scientists try to identify causes that are known to produce the effect in question. In making such determinations, historical scientists evaluate hypotheses against their present knowledge of cause and effect; causes that are known to produce the effect in question are judged to be better causes than those that are not. For instance, a volcanic eruption is a better explanation for an ash layer in the earth than an earthquake because eruptions have been observed to produce ash layers, whereas earthquakes have not.

This brings us to the great geologist Charles Lyell, a figure who exerted a tremendous influence on 19th-century historical science generally and on Charles Darwin specifically. Darwin read Lyell’s magnum opus, The Principles of Geology, on the voyage of the Beagle and later appealed to its uniformitarian principles to argue that observed micro-evolutionary processes of change could be used to explain the origin of new forms of life. The subtitle of Lyell’s Principles summarized the geologist’s central methodological principle: “Being an Attempt to Explain the Former Changes of the Earth’s Surface, by Reference to Causes now in Operation.” Lyell argued that when historical scientists are seeking to explain events in the past, they should not invoke unknown or exotic causes, the effects of which we do not know, but instead they should cite causes that are known from our uniform experience to have the power to produce the effect in question (i.e., “causes now in operation”).

Darwin subscribed to this methodological principle. His term for a “presently acting cause” was a vera causa, that is, a true or actual cause. In other words, when explaining the past, historical scientists should seek to identify established causes – causes known to produce the effect in question. For example, Darwin tried to show that the process of descent with modification was the vera causa of certain kinds of patterns found among living organisms. He noted that diverse organisms share many common features. He called these homologies and noted that we know from experience that descendants, although they differ from their ancestors, also resemble them in many ways, usually more closely than others who are more distantly related. So he proposed descent with modification as a vera causa for homologous structures. That is, he argued that our uniform experience shows that the process of descent with modification from a common ancestor is “causally adequate” or capable of producing homologous features.

And Then There Was One

Contemporary philosophers agree that causal adequacy is the key criterion by which competing hypotheses are adjudicated, but they also show that this process leads to secure inferences only where it can be shown that there is just one known cause for the evidence in question. Philosophers of science Michael Scriven and Elliott Sober, for example, point out that historical scientists can make inferences about the past with confidence when they discover evidence or artifacts for which there is only one cause known to be capable of producing them. When historical scientists infer to a uniquely plausible cause, they avoid the fallacy of affirming the consequent and the error of ignoring other possible causes with the power to produce the same effect. It follows that the process of determining the best explanation often involves generating a list of possible hypotheses, comparing their known or theoretically plausible causal powers with respect to the relevant data, and then, like a detective attempting to identify the murderer, progressively eliminating potential but inadequate explanations until, finally, one remaining causally adequate explanation can be identified as the best. As Scriven (1966: 250) explains, such abductive reasoning (or what he calls “Reconstructive causal analysis”) “proceeds by the elimination of possible causes,” a process that is essential if historical scientists are to overcome the logical limitations of abductive reasoning.
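The eliminative procedure described here can be sketched as a short program. What follows is a toy illustration under stated assumptions, not a model of how any historical scientist actually works: the three candidate causes come from the wet-street example discussed earlier, while the additional clues (“wet rooftops,” “wet lawns”) and the table of which cause can produce which clue are invented for the sketch.

```python
# Hypothetical table of causal powers. The causes are taken from the
# wet-street example; the extra clues and their assignments are assumptions
# made up for this illustration.
CAUSAL_POWERS = {
    "rain": {"wet streets", "wet rooftops", "wet lawns"},
    "street cleaning machine": {"wet streets"},
    "uncapped fire hydrant": {"wet streets", "wet lawns"},
}


def adequate_causes(evidence, causal_powers):
    """Return every cause known to produce all of the observed clues."""
    return [
        cause
        for cause, effects in causal_powers.items()
        if evidence <= effects  # subset test: each clue is a known effect
    ]


def best_explanation(evidence, causal_powers):
    """Return the one remaining adequate cause, or None if the evidence
    leaves several candidates (or none) standing."""
    remaining = adequate_causes(evidence, causal_powers)
    return remaining[0] if len(remaining) == 1 else None


# Wet streets alone cannot decide among the three candidates.
print(best_explanation({"wet streets"}, CAUSAL_POWERS))
# A clue that only one candidate can produce eliminates the others.
print(best_explanation({"wet streets", "wet rooftops"}, CAUSAL_POWERS))
```

The sketch returns no answer when more than one cause remains adequate, which mirrors the point that the inference is secure only when the evidence singles out one known cause.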

The matter can be framed in terms of formal logic. As C.S. Peirce noted, arguments of the form:

- if X, then Y

- Y

- therefore X

commit the fallacy of affirming the consequent. Nevertheless, as Michael Scriven (1959: 480), Elliott Sober (1988: 1-5), W.P. Alston (1971: 23) and W.B. Gallie (1959: 392) have observed, such arguments can be restated in a logically acceptable form if it can be shown that Y has only one known cause (i.e., X) or that X is a necessary condition (or cause) of Y. Thus, arguments of the form:

- X is antecedently necessary to Y,

- Y exists,

- Therefore, X existed

are accepted as logically valid by philosophers and as persuasive by historical and forensic scientists. Scriven especially emphasized this point: if scientists can discover an effect for which there is only one plausible cause, they can infer the presence or action of that cause in the past with great confidence. For instance, the archaeologist who knows that human scribes are the only known cause of linguistic inscriptions will infer scribal activity upon discovering tablets containing ancient writing.
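The difference between the two argument forms can be checked mechanically with a truth table. The sketch below rests on one simplifying assumption: “X is antecedently necessary to Y” is read as the material conditional “if Y, then X,” which sets aside the temporal and causal content of the original phrasing. The function names are introduced here for illustration.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion):
    """A form is valid if no truth assignment makes every premise true
    while making the conclusion false."""
    return all(
        conclusion(x, y)
        for x, y in product([False, True], repeat=2)
        if all(premise(x, y) for premise in premises)
    )


# Affirming the consequent: if X then Y; Y; therefore X.
affirming_consequent = is_valid(
    premises=[lambda x, y: implies(x, y), lambda x, y: y],
    conclusion=lambda x, y: x,
)

# Restated form: X is necessary for Y (if Y then X); Y; therefore X.
necessary_condition = is_valid(
    premises=[lambda x, y: implies(y, x), lambda x, y: y],
    conclusion=lambda x, y: x,
)

print(affirming_consequent)  # False
print(necessary_condition)   # True
```

On this reading the restated form is simply modus ponens applied to “if Y, then X,” so its logical validity is not in doubt; the substantive work lies in establishing that X really is the only known cause of Y.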

In many cases, of course, the investigator will have to work his way to a unique cause one painstaking step at a time. For instance, both wind shear and compressor blade failure could explain an airliner crash, but the forensic investigator will want to know which one did, or if the true cause lies elsewhere. Ideally, the investigator will be able to discover some crucial piece of evidence or suite of evidences for which there is only one known cause, allowing him to distinguish between competing explanations and eliminate every explanation but the correct one.

In my study of the methods of the historical sciences, I found that historical scientists, like detectives and forensic experts, routinely employ this type of abductive and eliminative reasoning in their attempts to infer the best explanation.[10] In fact, Darwin himself employed this method in The Origin of Species. There he argued for his theory of Universal Common Descent, not because it could predict future outcomes under controlled experimental conditions, but because it could explain already known facts better than rival hypotheses. As he explained in a letter to Asa Gray:

- I […] test this hypothesis [Universal Common Descent] by comparison with as many general and pretty well-established propositions as I can find – in geographical distribution, geological history, affinities &c., &c. And it seems to me that, supposing that such a hypothesis were to explain such general propositions, we ought, in accordance with the common way of following all sciences, to admit it till some better hypothesis be found out. (Darwin 1896, vol. 1: 437.)

DNA by Design: Developing the Argument from Information

What does this investigation into the nature of historical scientific reasoning have to do with intelligent design, the origin of biological information and the mystery of life’s origin? For me, it was critically important to deciding whether the design hypothesis could be formulated as a rigorous scientific explanation as opposed to just an intriguing intuition. I knew from my study of origin-of-life research that the central question facing scientists trying to explain the origin of the first life was this: how did the sequence-specific digital information (stored in DNA and RNA) necessary to building the first cell arise? As Bernd-Olaf Küppers (1990: 170-172) put it, “the problem of the origin of life is clearly basically the equivalent to the problem of the origin of biological information.” My study of the methodology of the historical sciences then led me to ask a series of questions: What is the presently acting cause of the origin of digital information? What is the vera causa of such information? Or: what is the “only known cause” of this effect? Whether I used Lyell’s, Darwin’s or Scriven’s terminology, the question was the same: what type of cause has demonstrated the power to generate information? Based upon both common experience and my knowledge of the many failed attempts to solve the problem with “unguided” pre-biotic simulation experiments and computer simulations, I concluded that there is only one sufficient or “presently acting” cause of the origin of such functionally specified information. And that cause is intelligence. In other words, I concluded, based on our experience-based understanding of the cause-and-effect structure of the world, that intelligent design is the best explanation for the origin of the information necessary to build the first cell. Ironically, I discovered that if one applies Lyell’s uniformitarian method – a practice much maligned by young earth creationists – to the question of the origin of biological information, the evidence from molecular biology supports a new and rigorous scientific argument to design.

What is Information?

In order to develop this argument and avoid equivocation, it was necessary to carefully define what type of information was present in the cell (and what type of information might, based upon our uniform experience, indicate the prior action of a designing intelligence). Indeed, part of the historical scientific method of reasoning involves first defining what philosophers of science call the explanandum – the entity that needs to be explained. As the historian of biology Harmke Kamminga (1986: 1) has observed, “At the heart of the problem of the origin of life lies a fundamental question: What is it exactly that we are trying to explain the origin of?” Contemporary biology had shown that the cell was, among other things, a repository of information. For this reason, origin-of-life studies had focused increasingly on trying to explain the origin of that information. But what kind of information is present in the cell? This was an important question to answer because the term “information” can be used to denote several theoretical
